Joint probability modelling

This post documents the inner workings of a statistical model (Restricted Boltzmann Machine) for modelling the joint probability of multiple random variables (R.V.s). In many real life scanarios, getting to grips with a statistical model can often give us an upper hand. In some cases we are given some data and have to work out a statistical model for it. RBMs does exactly that for multiple R.V.s. The challenge is how do we configure an RBM, which behavior depends on its parameters, to best describe the statistics of the data. Project code written in Python can be found here

Restricted Boltzmann Machines

RBM is a way of describing a joint probability of a number of random variables (two vectors , and , say), such that the probability of a particular case (), written as or simply , is proportional to the exponential of the weighted sum of these variable instances:

with

The weighted sum of the random variables is known as the energy function and the normalisation constant (the “partition function”) is given by summing all the possibilities of :

, with being the sample space of all possible combinations of .

To compute , we thus have to evaluate for all possible values of and sum it. This summation becomes a multiple integral for continuous, real valued . The definition of the partition function as a sum over the sample space ensures that each probability term defined by the RBM is and all probabilities sum up to unity (more general family of densities can be found here. The overall probability of our R.V.s for the RBM, is thus given by:

so to find the probability of a particular pair occurring, we simply substitute the pair into the above formula. The practical difficulty is the evaluation of the partition function requiring summation across the entire sample space. The structure of the RBM (as exponential of a weighted sum) also implies that any hidden R.V. in and visible R.V.s in are independent from each other in the same group when the other group is given:

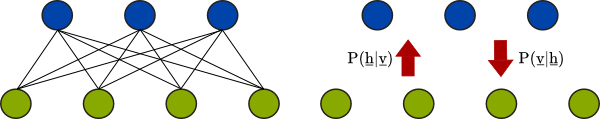

This property of RBMs simplifies the sampling process. Effectively we use these conditional probabilities to generate the hidden R.V.s from given visible R.V.s and generate visible R.V.s from the previously generated hidden R.V.s:

Here we illustrate a small fully connected RBM, larger RBMs are not necessarilly fully connected.

For now we treat all R.V.s in both hidden, visible layers as discrete taking 1 or 0 as values. i.e. , and with probability defined above. Turns out in the case of binary R.V.s, writing the probability as a normalised exponential of the energy function of the random variables can represent any distribution of binary vectors. This is by tuning the parameters of the distribution . The process of learning the distribution of a data set is thus tuning these parameters systematically such that the model approaches the statistics of the data.

In neural networks we can look at each individual neuron and characterise it with a firing probability given the states of all other R.V.s. This can be defined as the sigmoid of the weighted sum of other R.V.s:

Previously we have looked at the RBM by defining the joint probability of all R.V.s in one equation. If we extract the probability of each R.V. to be equal to unity:

, and

we can see the equivalent with Neural Networks. Consider the probability of a particular visible R.V. equals to 1:

,with representing all other visible R.V. apart from

Following conditional probability rule , we have:

The last expression is derived from total probability theory. Recall that all probabilities are in the form of so the troublesome term actually cancels out in the fraction, leaving:

dividing by the numerator, we have:

and similarly:

which is a sigmoid function of weighted sums of other weights! so the probability of a particular visible R.V. equals to one can be written as a sigmoid of weighted sums of all hidden R.V.s and a “bias” . This is exactly like defining a neural network with the firing probability as the sigmoid of the weighted sum of states in the hidden layer. We can go from a joint distribution defining the RBM to the individual probabilities of each R.V. reminiscent of a NN definition because the probabilities are defined as exponential functions. This allows us to simplify the probabilities with the sigmoid function, which is also based on exponentials. Despite the joint probability having the complicated partition function , the conditional probabilities are independent of . This allows us to sample from the joint probability using Gibbs sampling, which we’ll demonstrate in the code.

RBM unsupervised learning

Looking at the RBM topology again, there are no intra-layer connections (conditional probabilities). For general unsupervised learning, when we are given a set of example data , with unknown distribution. The task of the learning process is to model the distribution of the given data set. Often we have a model that can be used to fit the underlying distribution of the examples. To make our life easier, we have:

- A fixed graphical structure in the model, hopefully mirroring the inter-dependencies of the data (if you know, or make an intuitive guess) e.g. RBM structure assumed. By defining a structure, one can figure out a set of analytical/computational tools to handle these specific structures (conditional probabilities)

- some parameters in the model so we can tune to best estimate the underlying distribution of the example data set. e.g. for RBM it’s in the energy function.

so how we do this with the RBM, learning the statistics from the sampled data? Tuning the parameters such that it best fits the underlying distributino of the sampled data ? The difficulty is that RBM have hidden variables , and data for this is not provided directly. Having these hidden R.V.s in the model are in fact an advantage, giving RBM the ability to capture inter-dependent (conditional) probabilities between the visible R.V.s. The input samples are generated by an unknown process, this makes it difficult to find out how well the RBM approximates the true underlying. One simple way to look at it is we tune the RBM (essentially defines a joint probability of R.V.s) parameters such that when we sample from the RBM joint distribution (i.e. using the RBM as a generative model), the probability of getting those samples back is maximum. Mathematically this is written as maximising the product (“and” relation) of probabilities of getting a particular example given parameters :

we label this quantity as , to explicitly state that we want the parameters that maximises the probability of getting the examples . The probability of getting the ith example vector can also be written as a product of probabilities of individual elements in the ith example vector

i.e.

to write this as a sum of terms, we take the natural logarithm:

This way to maximising the probability of getting the training inputs when we run our model is like minimising the “distance” between our model , and the unknown input distribution , say, is known as the maximum-likelihood estimation.

One way of formally measuring the difference between the example distribution and our model distribution (strictly speaking this should be written as but we include the hidden vectors as they’re part of the RBM model) is the Kullback-Leibler divergence (KL-divergence):

The first term is the example distribution entropy, which is fixed for a target distribution. The second term involves our model distribution , or in the RBM case. To minimise the KL-divergence between the example and model distributions we need to minimise the first term and maximise the second term as entropy functions in the form is always . So how good the model approximates the example data depends on the example distribution, as distributions with lower entropy are easier to model (as they are “less random”) and others with higher entropy are harder to fit. For our model, we need to maximise the term . This is impractical to evaluate so we maximise the probability of getting the examples instead. i.e.

if our parameters maximises this then our model is “tuned” to the examples with “maximum-likelihood estimation” and achieved minimum KL-divergence between the example distribution and the model distribution. Now let’s look at the probability of running the model and generating the example vectors in detail:

We obtained the above expressions by substituting the example vector into the probability expression and summing over all outcomes of , at the same time noting the partition function is the exponential term summed over all possible values of . Both summations involves a lot of calculations to step through. The partition function is not constant and needs to be re-calculated as we vary the parameters during training.

So we want to tune the parameters to maximise the log probability of examples appearing from the model. The log probability is maximised when it’s derivative with respect to the parameters is zero:

absorbing the total sum in the denominators:

It is obvious that the coefficient of in the second summation on the RHS is the probability . The coefficient of in the first summation of the RHS can also be thought of as the probability (an exponential function of and normalised by a summation over all possible .)

Thus the derivative of the log probability can now be written as:

the respective summations are across all possible values of the corresponding R.V.s, we recognise these are expectation operations in the form of

now we have:

We simply substitute appropriate samples of into the expressions of . the expressions of can be analytically derived as we have the energy function as a polynomial of the visible and hidden vectors and the parameters as coefficients.

With the probability distribution dependent on the parameters .

To analytically calculate the true mean values one would need to sum (or integrate) over the entire sample space. Even for the second expression where we’re only concerned with all the possibilities of the hidden variable this would be very tedious if not impossible to carry out. In practice we can estimate the respective means by drawing samples from the distributions and . However, since both distributions involve the partition function , which again requires a summation over the entire sample space to compute, we cannot obtain samples from the probability expressions directly. Instead we rely on Monte-Carlo simulations to generate approximate samples for approximating the mean terms. This approximation works in practice as in most cases we do not need the precise gradient to maximise the cost function. A gradient with the correct sign is sufficient for us to we make adjustments to the magnitude of the gradient to achieve better convergence.

Gradient ascent training

Specifically, Markov-Chain Monte-Carlo (MCMC) is a computational technique for generating samples from a known, but complicated distribution. Let’s say this distribution is , with being a vector of R.V.s. By taking arbitrary initial values of the R.V.s., we write them as and suppose we can draw a sample from the distribution , and subsequently draw samples from the probability . If we are able to continue this process of drawing samples from the conditional probabilities , then according to the law of large numbers, eventually the samples drawn will resemble samples drawn from despite all we did was drawing samples from the conditional probabilities. This will happen regardless of the initial values . Recall that generating samples from the RBM probability directly is problematic because of the complicated partition function but the conditional probabilities and are readily available as simple expressions. We can start with an arbitrary visible R.V. set and generate hidden R.V.s according to the newly generated hidden R.V.s are then used to generate a new set of visible R.V.s according to . This process is repeated and as we go through more iterations, the generated samples will resemble more of the samples generated by the RBM probability . So despite we cannot evaluate the joint probability , the process of MCMC simulation allows us to draw approximate samples from the RBM. We use these MCMC samples in the gradient equation to approximate the expectation and hence the gradient. We then update the parameters according to the gradient as to maximise the probability of obtaining the training examples with our model. i.e.

and

Practically the update rate is a small number (e.g. 0.001). This process of updating the parameters by a small amount proportional to the gradient at each iteration in order to maximise the probability is known as gradient ascent.

So we know the MCMC process will eventually ‘converge’ if we run enough simulation steps. i.e. the generated samples from the conditional probabilities will resemble samples generated by the joint probability. But how many simulations is enough? Theory only guarantees the simulation will converge after a large number of steps but does not allow us to predict the number of simulations required. Thankfully, the exact gradient is not required for the gradient ascent algorithm to work. A gradient estimate by running a few MCMC steps will be sufficient to guide the parameter update in the right direction. In our experiments, a running average of estimated gradients over consecutive sets of training examples seems to work better than using the gradient estimate directly. In the extreme case a single MCMC step can be carried out, exploiting the fact that the gradient involves expectation terms. These are approximated with expectations of the conditional probabilities i.e. simply substitute by and . For binary R.V.s the conditional probabilities are Bernoulli probabilities with the expected value equal to the probability of drawing a . These are given by the sigmoid functions described above in and

The trick of using the analytically calculated mean values without carrying out sampling are known as mean field updates. Whereas the process of estimating the gradient with truncated MCMC steps is known as contrastive divergence. This was one of the main breakthroughs that allowed training RBMs of usable size possible.

Modeling joint probability of real valued R.V.s

The Restricted Boltzmann Machine can model any joint distribution of binary R.V.s. The size of the RBM required to model an arbitrary binary distribution depends on the distribution itself as well as the number of R.V.s in the example data set. Indeed, the probability maximisation derived above (maximum-likelihood) is for a general energy function . The R.V.s and can be binary or real. For binary R.V.s we can use the conditional probabilities derived above in the MCMC process to estimate the gradient updates. For real visible R.V.s. with binary hidden R.V.s we can use the energy function:

just like the binary RBM, we derive the probability independent of the partition function as:

writing this in terms of the energy function and using results of standard integrals:

this is a Gaussian distribution and we write:

For the probability of a hidden R.V. depending on the visible R.V.s, we have:

substituting in the energy function and evaluating the total probability of the hidden random variable:

which simplifies to:

To evaluate the probability of we set in the numerator on the RHS:

We now have the conditional probabilities to approximate samples from to estimate the means for the gradient updates.

From math to code: practical aspects

RBMs with real visible units are known to be problematic to train. I have attempted an implementation with the Python/Theano (0.7) framework, which allows fast execution on a GPU. The script is written from scratch and a few tricks were experimented with training the RBM. The aim of the experiment was to learn pixel statistics from a face image data set (MIT CBCL). To speed up execution, Amazon EC2 GPU instances with the ipython notebook environment were used for development. (I used cuda 7.0, cuda 7.5 is also available at time of writing.) The simulated RBM consists of 361 (19x19) real visible units and 256 hidden binary units. Simulating the the fully connected RBM with 361 units on a GPU helped a lot in shortening the implementation time, particularly when a number of simulations were carried out to find good learning rates and initialisation parameters. The Theano 0.7 library supports GPU with 32bit floating point numbers. The trick is to ensure all data were defined as float32. For float64 data Theano 0.7 defaults to using the CPU.

All 2400 images in the training set were used for training. All pixel data were normalised to the range . 50 images per mini-batch were fed to the network at a time for each gradient update. i.e. each gradient updates were computed with the average gradient over respective approximated gradients from each example in the mini-batch.

The network was trained for 2500 epochs. 15 iterations of the Monte Carlo chain were used for each traing example. Update rates for parameters and were set to 0.001 whereas update rates for parameters and in were both set to 0.00005. Each mini-batch took less than 10 seconds to train on an EC2 instance with a GRID 520 card.

The activity of the hidden units are also “sparcified” by including an extra term in the cost function to penalise the difference between a target average firing rate and the actual mean firing rate computed from the training examples:

where the mean activation can be calculated from all examples in a mini-batch:

A running gradient average RMSprop was used to smooth out the gradient updates during simulation. I found this smoothing was necessary for the training to work without other “momentum” methods. The combination of mini-batch/RMSprop also drastically improved the convergence rate and the network converges to a local minimum with a lot less iterations compared to vanilla gradient ascent.

During the simulation, the “reconstruction error” was used as a benchmark for convergence progress. The reconstruction error essentially calculates the difference between the initial samples (which are the example images) of the MC chain and the result generated by the network after a few MC samples. The reconstruction error does not measure the probability cost directly but large reconstruction errors indicates the network model is significantly different than the training data distribution. (For Binary visible units, Annealed Importance Sampling estimation can be derived and used to estimate the probability cost function directly.)

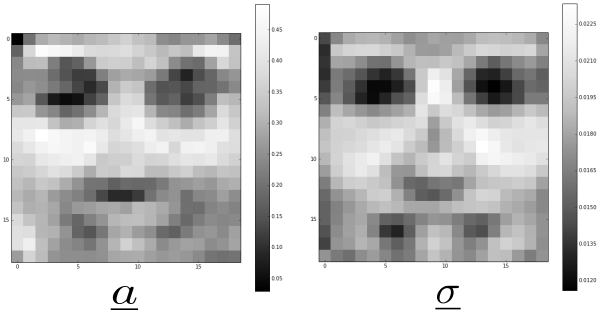

The parameters can be thought of as the “mean” of the model whereas the parameters can be thought of the deviation from the mean when the RBM is used as a generative model. For image data experiments, we can visualise the parameters and and they should resemble the average of the training dataset. Typical parameters and after 2500 epochs are shown below:

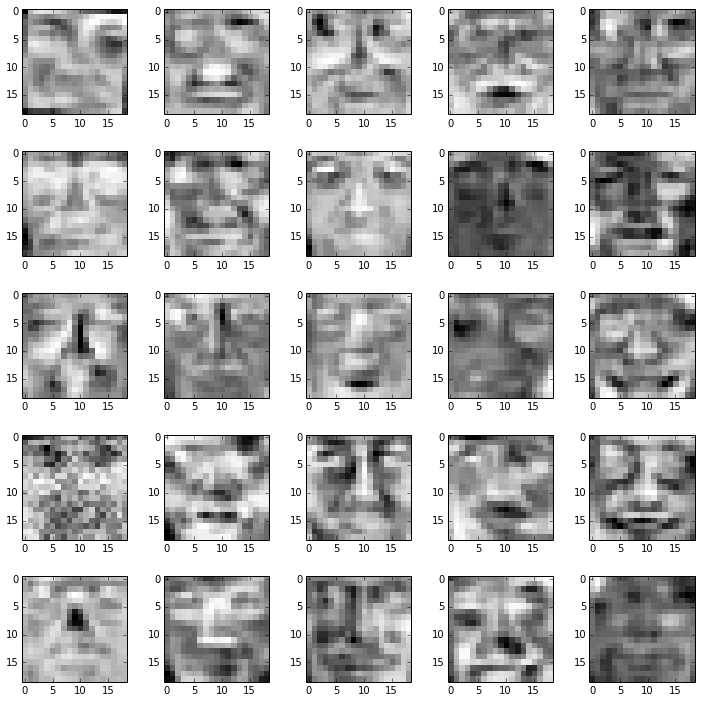

The generated images have less variety compared to the original data set. This might be due to the fact that we have a fully connected network. A multilayer deep network with localised connections for each hidden unit should work better in picking out the subtle features from the images in the training set. These samples are generated after 2500 epochs of training. Further training the network results in the generation of more homogeneous faces.

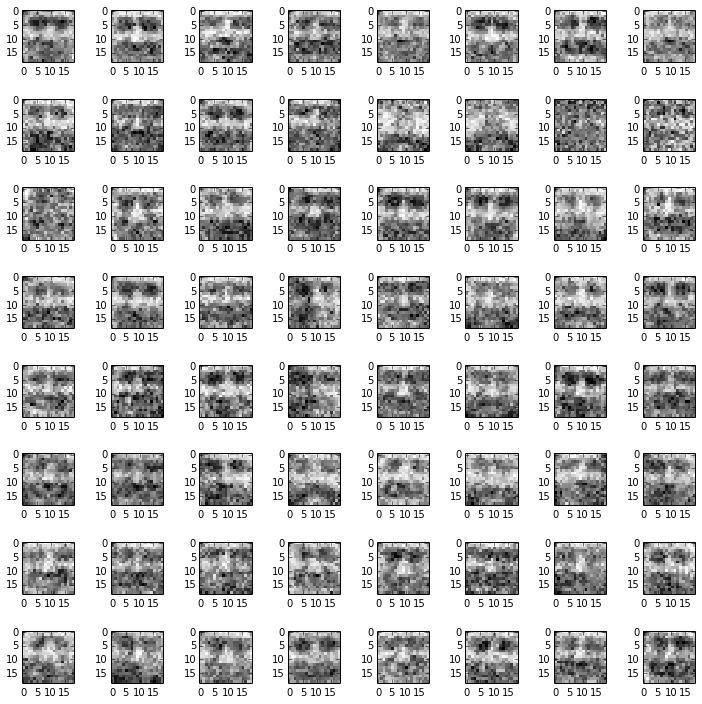

The parameters resembles receptive fields in the sense that these are the preferred inputs to activate the hidden units. A few typical plots of for fixed hidden unit and are shown below:

How to use the code

A brief guide to methods and parameter settings can be found with the source’s README.md

References

For RBM with real visible variables:

-

“Modeling human motion using binary latent variables” by Taylor and colleagues.

-

“Improved learning of Gaussian-Bernoulli Restricted Boltzmann Machines” by Cho and colleagues.

Training tricks:

-

I picked up RMSprop from Geoffrey Hinton’s video lecture, also mini-batch training and Rprop.

-

“A practical guide to training Restricted Boltzmann Machines” by Geoffrey Hinton provided lots of tips for training RBMs, including the sparcification of the hidden units I’ve used here.